Every startup weighs the risk of over-scaling. Vibe code a dumb app and be OK with a fail whale? Or build 50 microservices autoscaled in Kubernetes, with a full DevOps team load balancing every layer?

You’re probably better off with the former, as long as you can tolerate the occasional fail whale.

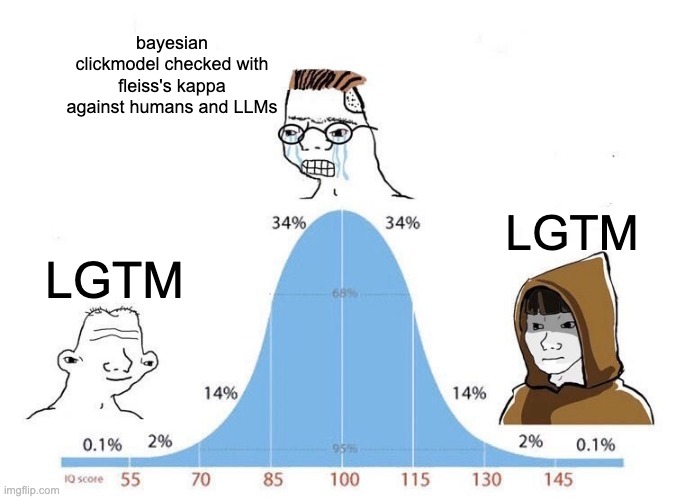

The same thing happens with evals in AI apps. Even the smartest teams can overinvest before seeing any success.

Sooo many teams go through this, obsessing over search metrics waaaay too much.

Evals aren’t a one-time thing. Like other parts of our company, they’re something we get better at over time. Teams get stuck building evals and never actually deliver value. It can become a tarpit as teams debate the meaning of labels, clicks, and conversions in search results.

Painful search projects I’ve lived through:

- a major consulting engagement with a job search company, where we spent months building clickstream analytics so that we could eventually build good search. The customer lost interest, a reorg happened, and the engagement was canceled before we delivered any actual search improvements.

- at Shopify, trying to build the perfect downsampling approach that correlates with A/B tests. We shipped a lot, but we could have shipped more if we had gotten to prod faster.

- at Reddit, we got stuck for too long with Learning to Rank as a skunkworks side project that was never actually tested in prod. When we finally did test it, we hit the real infrastructure and evaluation problems we had avoided by keeping it a side project.

The main lesson in all of this: don’t spend too long building a perfect feedback loop. Get to prod and real customers ASAP. The market is the ultimate eval.

Gradually layer small improvements onto your eval process over time. Don’t put off improving your search and AI apps while waiting for unachievable perfection.

What should you do?

After 12 years of failing (and sometimes succeeding) at search, I trust this proven process™️ as the starting point:

- Test in prod

- (With feature flags!)

- Focus on systematic qualitative eval

- ‘Unit testing’ algorithmic behavior is a good thing

- Evolve from qualitative to quantitative

Test in prod - get your code out of the lab. Don’t let your changes sit locally, off on a branch, or stuck on staging. Get them to prod. Iterate there with real users and real traffic, not synthetic traffic. Make sure what you built scales and is something you can operate. Then get real feedback from real users in the real app.

With feature flags - be able to toggle your approach on and off in prod. I love feature flags. They’re a baby step toward A/B testing for when you’re ready for that level of maturity, and they’re easy to add and manage without building out complex analytics.
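A minimal sketch of a percentage-based feature flag, assuming a hypothetical in-memory `FLAGS` dict and placeholder `old_ranker`/`new_ranker` functions. Real flag services and libraries have their own APIs; this only illustrates the core idea of hashing each user into a stable bucket so the same user always sees the same variant.

```python
import hashlib

# Hypothetical in-memory flag store. In practice this might live in a config
# service or database so it can be flipped without a deploy.
FLAGS = {"new_ranker": {"enabled": True, "rollout_percent": 10}}


def flag_on(flag_name: str, user_id: str) -> bool:
    """Return True if the flag is enabled for this user.

    Users are bucketed deterministically by hashing the flag name and user id,
    so a given user gets the same answer on every request.
    """
    flag = FLAGS.get(flag_name)
    if flag is None or not flag["enabled"]:
        return False
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in 0..99
    return bucket < flag["rollout_percent"]


def old_ranker(query: str) -> list[str]:
    # Placeholder for the existing search path (hypothetical).
    return [f"old:{query}"]


def new_ranker(query: str) -> list[str]:
    # Placeholder for the experimental search path (hypothetical).
    return [f"new:{query}"]


def search(query: str, user_id: str) -> list[str]:
    # Route each request through the flag; everyone else stays on the old path.
    if flag_on("new_ranker", user_id):
        return new_ranker(query)
    return old_ranker(query)
```

Setting `enabled` to `False` acts as a kill switch, and `rollout_percent` lets you widen exposure gradually, which is roughly where the step toward real A/B testing begins.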

Focus on systematic qualitative eval - if you’re doing search, every team I’ve worked on has evolved a qualitative sanity check where we compare search results side by side. This final check cuts through a lot of BS and makes sure any quantitative steps along the way aren’t borked. Focus on the queries whose results have changed. Ask people which search result listing they prefer. Do this blindly - don’t tell anyone which side has the new change.
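One way to set up a blind side-by-side, sketched under a few assumptions: results for each query are already captured as lists of doc ids from the old and new systems, and the `_new_on_left` key is kept server-side and never shown to raters. The function names are hypothetical, not from any particular tool.

```python
import random


def changed_queries(old: dict[str, list[str]], new: dict[str, list[str]]) -> list[str]:
    """Only queries whose result lists differ are worth a rater's time."""
    return [q for q in old if q in new and old[q] != new[q]]


def build_blind_pairs(old: dict[str, list[str]], new: dict[str, list[str]], seed: int = 0) -> list[dict]:
    """Randomly put old/new results on the left or right so raters can't tell which is which."""
    rng = random.Random(seed)
    pairs = []
    for q in changed_queries(old, new):
        new_on_left = rng.random() < 0.5
        left, right = (new[q], old[q]) if new_on_left else (old[q], new[q])
        # Show raters only "query", "left", and "right"; "_new_on_left" is for unblinding later.
        pairs.append({"query": q, "left": left, "right": right, "_new_on_left": new_on_left})
    return pairs


def tally(pairs: list[dict], prefs: list[str]) -> dict[str, int]:
    """Unblind rater preferences ("left", "right", or "same") into new/old/tie counts."""
    counts = {"new": 0, "old": 0, "tie": 0}
    for pair, pref in zip(pairs, prefs):
        if pref == "same":
            counts["tie"] += 1
        elif (pref == "left") == pair["_new_on_left"]:
            counts["new"] += 1
        else:
            counts["old"] += 1
    return counts
```

Filtering to changed queries first keeps raters focused on what the change actually touched, and the fixed seed makes a given rating session reproducible.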

‘Unit test’ basic cases - a lot of fuzzy cases you’d associate with some machine learning algorithm can be automated as unit tests. For example, “I searched for a bluetooth speaker; were the results actually in category:speakers?” is objective, and far easier to understand than traditional, highly technical search quality metrics. Hold off on those metrics as long as you can.
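A sketch of the bluetooth speaker check as a plain pytest-style test. The tiny `CATALOG` and the naive term-matching `search` function are hypothetical stand-ins for a real index; the point is only that the assertion reads like the behavior you care about. Checking just the top 2 is an arbitrary cutoff chosen for this toy data.

```python
# Hypothetical product catalog standing in for a real search index.
CATALOG = [
    {"id": 1, "title": "Portable bluetooth speaker", "category": "speakers"},
    {"id": 2, "title": "Waterproof bluetooth speaker", "category": "speakers"},
    {"id": 3, "title": "Bluetooth headphones", "category": "headphones"},
    {"id": 4, "title": "Speaker stand", "category": "accessories"},
]


def search(query: str, k: int = 10) -> list[dict]:
    """Naive stand-in for a search engine: rank by number of query terms in the title."""
    terms = query.lower().split()
    scored = []
    for doc in CATALOG:
        title_terms = doc["title"].lower().split()
        score = sum(term in title_terms for term in terms)
        if score > 0:
            scored.append((score, doc))
    # Stable sort: ties keep catalog order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def test_bluetooth_speaker_returns_speakers():
    # Objective, readable check: the top results should actually be speakers.
    top = search("bluetooth speaker", k=2)
    assert top, "expected at least one result"
    for doc in top:
        assert doc["category"] == "speakers", doc
```

Running `pytest` on this file (assuming pytest is installed) picks up the `test_` function automatically.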

Evolve to a quantitative approach - everything above holds the beginnings of a flywheel. When users notice a problem, you can start gathering labels: what ARE good and bad results for this search query? Collect a dozen or so examples and treat them as a unit test for this use case.
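A sketch of turning a handful of labels into a unit test. The post doesn’t name a metric; precision@k is swapped in here as perhaps the simplest option. `JUDGMENTS`, `check_query`, and the result ids are all hypothetical.

```python
# Hypothetical labels gathered after users reported a problem with a query.
# doc_id -> True (good result) / False (bad result).
JUDGMENTS: dict[str, dict[int, bool]] = {
    "bluetooth speaker": {1: True, 2: True, 3: False, 4: False, 5: True, 6: False, 7: True},
}


def precision_at_k(result_ids: list[int], labels: dict[int, bool], k: int) -> float:
    """Fraction of the top-k slots filled by a result labeled good.

    Unlabeled docs count as not relevant, and empty slots (fewer than k
    results) count against the score.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in result_ids[:k] if labels.get(doc_id, False))
    return hits / k


def check_query(query: str, result_ids: list[int], min_precision: float, k: int = 5) -> float:
    """Unit-test style check: fail loudly if this query drops below the bar."""
    score = precision_at_k(result_ids, JUDGMENTS[query], k)
    assert score >= min_precision, f"{query!r}: P@{k}={score:.2f} < {min_precision}"
    return score


# Example: ids as returned by your (hypothetical) search backend.
check_query("bluetooth speaker", [1, 2, 5, 3, 6], min_precision=0.6)
```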

This is my ideal starting point for a search app with no existing eval practices. Get the team participating in qualitative evals. Start gathering some ad hoc, certainly imperfect evals on use cases that need fixing. Experiment with fixing these locally, beginning to build a “CI” of metrics for these queries to make sure they stay fixed (more on this in a future article). But don’t oversell these evals as anything more than a regression test until you’ve built a case to take things to the next level.
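The post defers the details of this “CI” to a future article, so what follows is only one possible shape for it, assuming per-query scores (from something like a labeled-query metric) are computed on each change and compared against a saved baseline. `find_regressions` and the example scores are hypothetical.

```python
def find_regressions(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.0,
) -> list[str]:
    """Return queries whose score dropped more than `tolerance` below baseline.

    A query missing from `current` also counts as a regression: a fixed query
    that silently drops out of the eval is itself a problem.
    """
    regressions = []
    for query, base_score in baseline.items():
        score = current.get(query)
        if score is None or score < base_score - tolerance:
            regressions.append(query)
    return regressions


# Hypothetical scores: baseline saved when each query was fixed, current from this build.
baseline = {"bluetooth speaker": 0.6, "usb c cable": 0.8}
current = {"bluetooth speaker": 0.6, "usb c cable": 0.6}
print(find_regressions(baseline, current))
```

In a CI job you might fail the build whenever the returned list is non-empty. A small `tolerance` can absorb noise, but as the post says, treat this as a regression test, not a verdict on overall search quality.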

None of this requires an early data science hire, or a mature data culture. Starting simple and showing a bit of value can help build the case for those things. Starting with PhD-level data science your stakeholders won’t understand, however, will be counterproductive. Start instead with what they likely do understand: a feedback loop that looks like regular app development, with a toe dipped toward the quantitative evals you’d eventually like to build.

If you need help with any of this, get in touch. I’m available for consulting.

Enjoy softwaredoug in training course form!

Starting Feb 2!

I hope you join me at Cheat at Search with LLMs to learn how to apply LLMs to search applications. Check out this post for a sneak preview.