This is a preview of my upcoming course Cheat at Search with LLMs

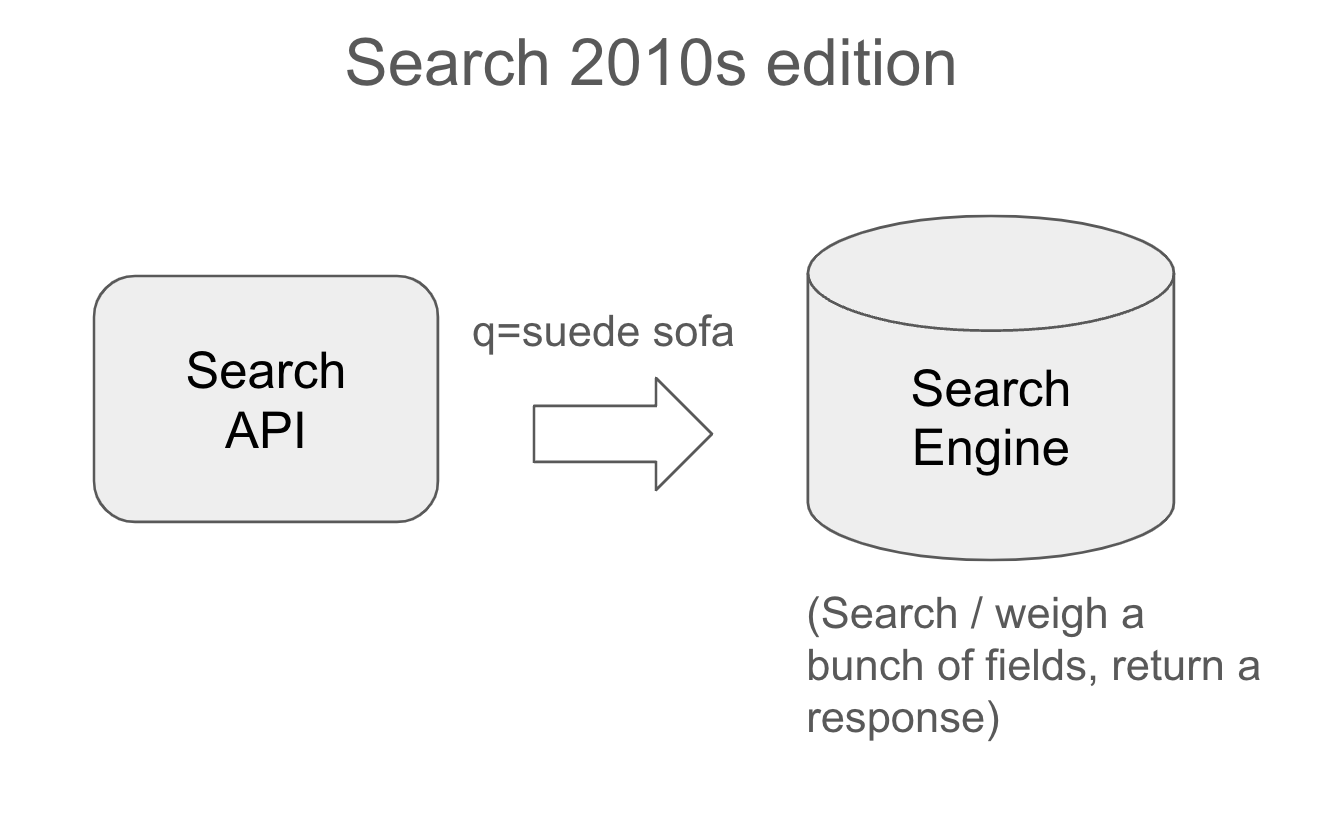

In the past, we lived a kind of lie - that search queries were “unstructured” strings. We forwarded them to a bunch of text fields and vector indices for matching, combined their scores, then ranked and returned the results.

Long have the wisest amongst us argued against such an approach. Some argued that search is never unstructured, and they’re right. In fact, I’ve been one of the wrong ones, so focused on ‘relevance’ (as in ‘ranking’) as some holy thing rather than on structure and understanding.

We used to build the system below. Just send the query into the search engine and rely on the knobs and dials that Solr, Elasticsearch, and their peers give us. We did this in part because, 15 years ago, our lives as search people began and ended at search engine configuration.

We were like database administrators, but instead of tuning SQL query performance, we tweaked boosts, built custom synonym lists, and played with fuzzy matching and tokenization settings.

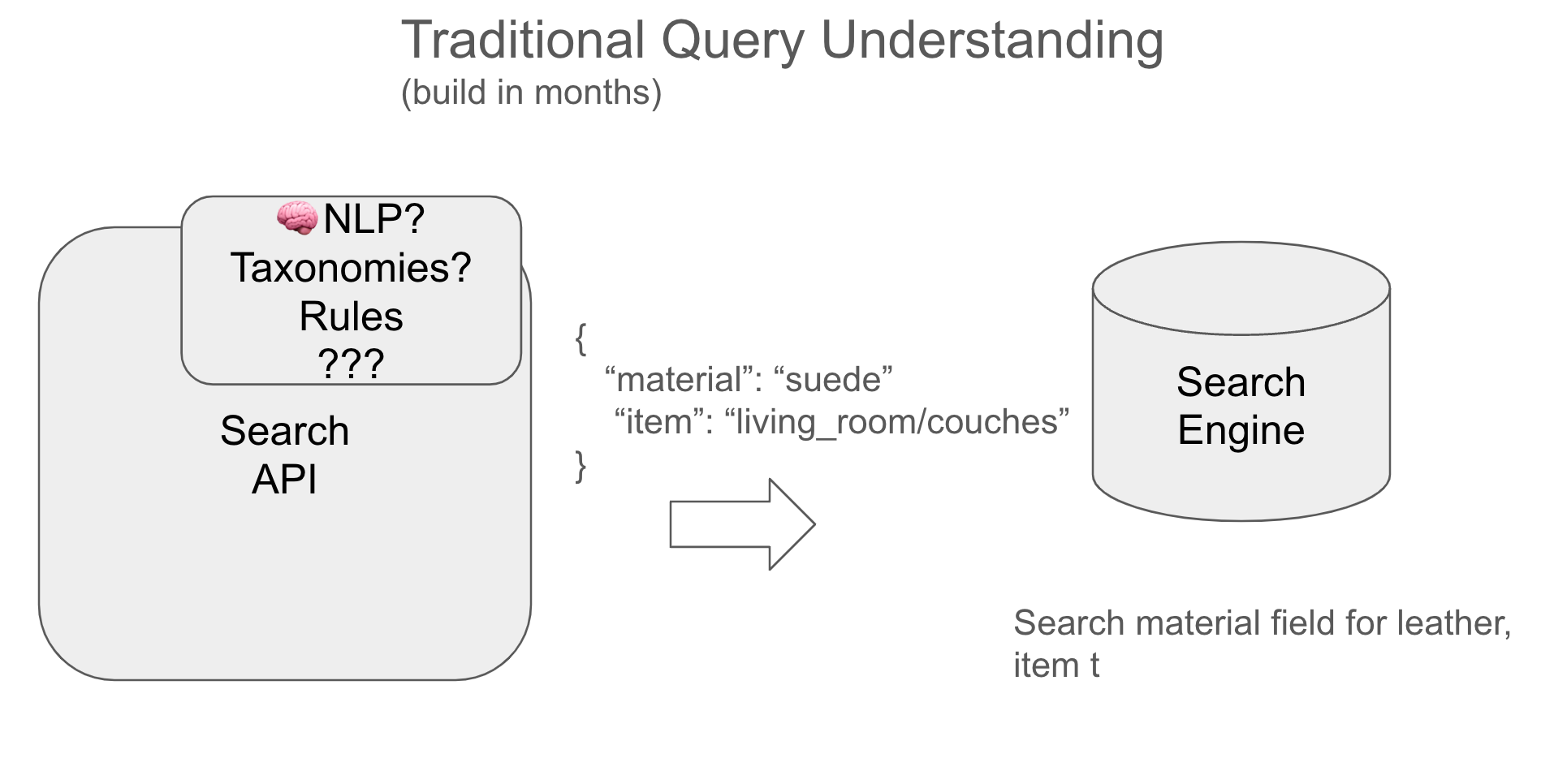

Cool but expensive - query/content understanding

Eventually, we got wise to this idea of query (and content) understanding. Those of us willing to make the investment reshaped our queries into a structure better suited to our data (and structured the data to target our queries). We built systems with models that enriched both our index and our queries.
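To make "structuring the query" concrete, here is a minimal sketch, assuming an e-commerce index with hypothetical `title`, `description`, `brand`, `color`, and `price` fields. Once a query like "red nike running shoes under $100" has been parsed into structure, the extracted attributes can become Elasticsearch filters while the leftover text is still scored for relevance. `StructuredQuery` and `to_es_query` are illustrative names, not part of any library.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class StructuredQuery:
    """A hypothetical structured form of a raw search query."""
    keywords: str                      # free text left over after extraction
    brand: Optional[str] = None
    color: Optional[str] = None
    max_price: Optional[float] = None


def to_es_query(sq: StructuredQuery) -> dict[str, Any]:
    """Translate a StructuredQuery into an Elasticsearch bool query.

    Field names are assumptions about the index schema, not a real mapping.
    """
    filters: list[dict[str, Any]] = []
    if sq.brand:
        filters.append({"term": {"brand": sq.brand}})
    if sq.color:
        filters.append({"term": {"color": sq.color}})
    if sq.max_price is not None:
        filters.append({"range": {"price": {"lte": sq.max_price}}})
    return {
        "query": {
            "bool": {
                # Leftover keywords still go through relevance scoring...
                "must": [{"multi_match": {"query": sq.keywords,
                                          "fields": ["title^2", "description"]}}],
                # ...while extracted attributes become hard filters.
                "filter": filters,
            }
        }
    }


# "red nike running shoes under $100", already structured by hand:
sq = StructuredQuery(keywords="running shoes", brand="nike", color="red", max_price=100)
es_query = to_es_query(sq)
```

The design choice here is that structure narrows the candidate set, while ranking only has to sort what remains.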

Now this was an investment! We had to hire more specialists. Especially in the early 2010s, building and validating, say, a query classifier or a knowledge graph was a daunting task. You had to hire expensive consultants (like me!) to do that work.

Teams not eager to hire and grow a true search practice avoided this approach. Some might take on one or two promising, low-hanging query/content enrichment projects, but nobody was going to invest months into a system that might not pay off.

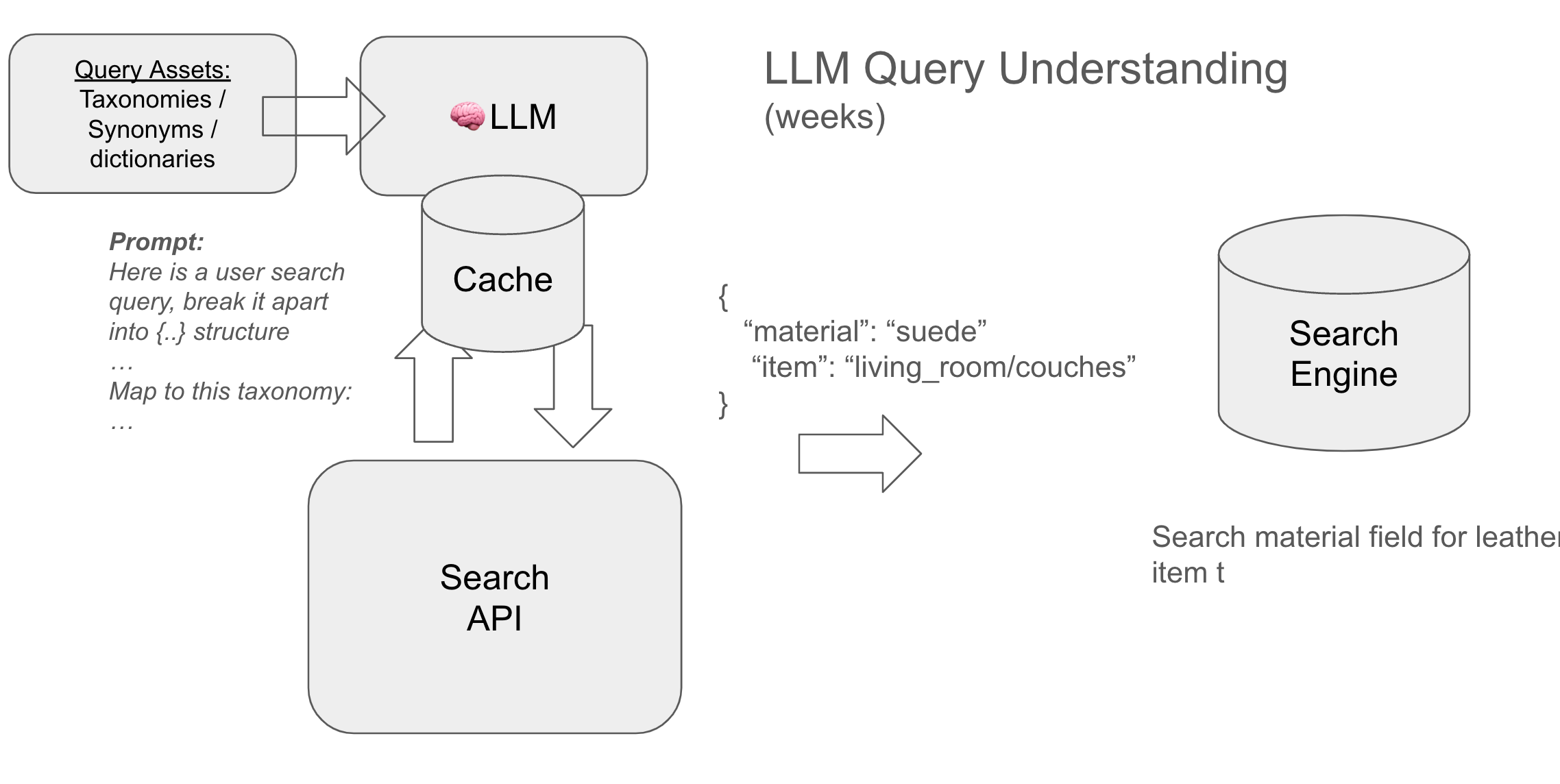

Today - Cheap and easy enrichment

Now we are at a completely new point. We can get 80% of the value of many forms of query/content enrichment with little effort. Often, even a small open source LLM can turn a search query into reasonable structure at relatively low cost. We can then cache the bejesus out of it.
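As a minimal sketch of turning a search query into structure with a small local model, assuming the model is served by Ollama on its default port (the model name `llama3.2`, the prompt, and the output keys are all assumptions), the LLM call can sit behind a plain prompt-to-text function. `understand_query` accepts any such callable, so swapping models or stubbing the LLM out is easy.

```python
import json
import urllib.request
from typing import Callable

# Hypothetical prompt; a real one would be tuned to your catalog's attributes.
PROMPT = """Extract structure from this e-commerce search query.
Return only JSON with keys: keywords (string), brand (string or null),
color (string or null), max_price (number or null).

Query: {query}"""

ALLOWED_KEYS = {"keywords", "brand", "color", "max_price"}


def ollama_llm(prompt: str, model: str = "llama3.2") -> str:
    """Call a local Ollama server's /api/generate endpoint (model name is an assumption)."""
    body = json.dumps({"model": model, "prompt": prompt,
                       "stream": False, "format": "json"}).encode("utf-8")
    req = urllib.request.Request("http://localhost:11434/api/generate", data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


def understand_query(query: str, llm: Callable[[str], str] = ollama_llm) -> dict:
    """Ask the LLM for structure; fall back to plain keywords if its output is unusable."""
    try:
        parsed = json.loads(llm(PROMPT.format(query=query)))
    except (json.JSONDecodeError, TypeError):
        return {"keywords": query}
    if not isinstance(parsed, dict):
        return {"keywords": query}
    # Keep only the keys we asked for (small models sometimes add extras) and drop nulls.
    structured = {k: v for k, v in parsed.items() if k in ALLOWED_KEYS and v is not None}
    structured.setdefault("keywords", query)
    return structured
```

Falling back to `{"keywords": query}` means a bad generation degrades to the old "unstructured" behavior rather than breaking search.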

In short we can build something like:

It’s not clear why you WOULDN’T do this right now. A year or two ago, when you had to rely on a slow, expensive call to OpenAI, it might not have made sense. But these days we can run LLM inference ourselves with pretty good local models. We can cache the most frequent queries and leave the long tail to direct inference, avoiding LLM computation as much as possible.
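The cache-first flow can be sketched as below, assuming exact-match caching on a lightly normalized query string and a query log you can count over. `CachedUnderstander`, `precompute`, and `infer` are hypothetical names. Frequent "head" queries are filled ahead of time, and anything not in the cache goes straight to the model.

```python
from collections import Counter
from typing import Callable, Iterable


class CachedUnderstander:
    """Serve frequent queries from a cache; send long-tail queries to the LLM.

    `infer` is any query -> structure function, e.g. an LLM-backed one.
    """

    def __init__(self, infer: Callable[[str], dict]):
        self.infer = infer
        self.cache: dict[str, dict] = {}
        self.llm_calls = 0  # how often we actually hit the model

    @staticmethod
    def normalize(query: str) -> str:
        # Cheap normalization so "Red  Shoes" and "red shoes" share a cache entry.
        return " ".join(query.lower().split())

    def precompute(self, query_log: Iterable[str], top_n: int) -> None:
        """Fill the cache with the top_n most frequent queries in a log."""
        counts = Counter(self.normalize(q) for q in query_log)
        for query, _ in counts.most_common(top_n):
            if query not in self.cache:
                self.cache[query] = self._infer(query)

    def understand(self, query: str) -> dict:
        key = self.normalize(query)
        if key in self.cache:
            return self.cache[key]   # head query: no LLM call
        return self._infer(key)      # long tail: direct inference

    def _infer(self, query: str) -> dict:
        self.llm_calls += 1
        return self.infer(query)
```

In this sketch the long tail is deliberately not cached, matching the split described above; adding an LRU for tail queries would be a reasonable variation.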

In the 20% of cases where we can’t do this live, we can still do the work offline:

- Precompute the cache - while shown here inline, you can do this work in batches - computing query understanding over the most common queries you’ve recently seen

- A vector cache - Instead of caching directly on the string, use a semantic cache: look up a similar query by embedding in a vector index

- Build training sets - We can use this approach to seed training data for the traditional query understanding machine learning we might do
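For the vector cache idea, here is a minimal sketch, assuming some text-to-vector `embed` function and a similarity `threshold` you would tune yourself (both hypothetical here). A new query reuses the structure of its nearest cached neighbor when the two are close enough. A brute-force cosine scan stands in for a real vector index.

```python
import math
from typing import Callable, Optional, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Look up cached query understanding by embedding similarity, not exact string.

    `embed` is any text -> vector function (e.g. a sentence-embedding model);
    `threshold` is an assumed cutoff to tune on your own queries.
    """

    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.9):
        self.embed = embed
        self.threshold = threshold
        self.entries: list[tuple[Vector, dict]] = []

    def add(self, query: str, structure: dict) -> None:
        self.entries.append((self.embed(query), structure))

    def lookup(self, query: str) -> Optional[dict]:
        """Return the structure of the most similar cached query, if similar enough."""
        if not self.entries:
            return None
        qv = self.embed(query)
        best_vec, best_structure = max(self.entries, key=lambda e: cosine(qv, e[0]))
        if cosine(qv, best_vec) >= self.threshold:
            return best_structure
        return None
```

The threshold is the key design choice: too low, and "nike shoes" may inherit the structure of "nike socks"; too high, and the cache rarely hits.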

Really, it’s about mindshare

In the end, the reality is that your average ML engineer is fast becoming someone who spends more time with LLMs than with traditional ML models. Our jobs are less about PyTorch and more about talking to Ollama. If you stick with the “traditional” way of doing things, you risk drifting away from developer mindshare, because most developers will have moved to a new way of thinking about the search problem.

Enjoy softwaredoug in training course form!

Starting Feb 2!

I hope you join me at Cheat at Search with LLMs to learn how to apply LLMs to search applications. Check out this post for a sneak preview.